My 2026 Coding Stack

Why I Stick With Flexibility Over Hype

It’s the end of the year and, as usual, we’re all doing life wrap-ups. Spotify Wrapped comes out (not that relevant since I switched to Apple Music), Letterboxd Wrapped comes out, people make reels with their travel collections, and so on.

There are definitely many more philosophical things to reflect on, but in this post I want to talk to you about how my approach to programming has changed over the course of this year.

The Hype Cycle and Tool Longevity

I don’t want to give a history lesson listing the step-by-step evolution of these tools, because many of them have a very short life expectancy. It’s better to focus on what’s relevant right now. Just think of the Windsurf editor. A year ago it seemed like it could capture a large part of the market, but that was mostly the usual hype from content creators pushing new technology and making you feel like you’re falling behind the industry. In the Stack Overflow 2025 Developer Survey, released at the end of July, Windsurf had adoption under 5%. On Reddit, the Windsurf page has 12k weekly visitors (as of December 29th) compared to Cursor’s 121k, roughly a tenth. To me that shows it has become a niche tool, and I wouldn’t bet on its future.

My Early AI Experience

ChatGPT came out at the end of 2022, and it seems like a lifetime ago, but until 2025 I was pretty stubborn about using AI. I used it very naively—just pasting stack traces into ChatGPT to fix some errors and getting help with bits of code from Copilot’s autocomplete, which my company provided me. Nothing earth-shattering, and honestly I used the chat more for searching recipes and planning trips.

But ever since this tweet gave rise to the “vibe coding” movement, I’ve increasingly felt like I was missing out on something big.

There's a new kind of coding I call "vibe coding", where you fully give in to the vibes, embrace exponentials, and forget that the code even exists. It's possible because the LLMs (e.g. Cursor Composer w Sonnet) are getting too good. Also I just talk to Composer with SuperWhisper…

— Andrej Karpathy (@karpathy) February 2, 2025

Finding My Stack

From February to July 2025 I tried many tools, but none convinced me. I tried Tabby, but it was a disaster. It wasn’t even remotely comparable to Copilot. I subscribed to ChatGPT and used the desktop client’s integration with VS Code. I liked it, but it was very limited.

I tried Cursor and I liked it a lot, but paying a subscription just for coding didn’t sit right with me. Then I finally arrived at the stack I’m using now.

A huge disclaimer: I’m not cheap (“spilorcio”, “braccino corto” in Italian - ed) in everyday life, but for some reason when it comes to using AI I’m extremely careful about expenses.

That said…

My Current Stack

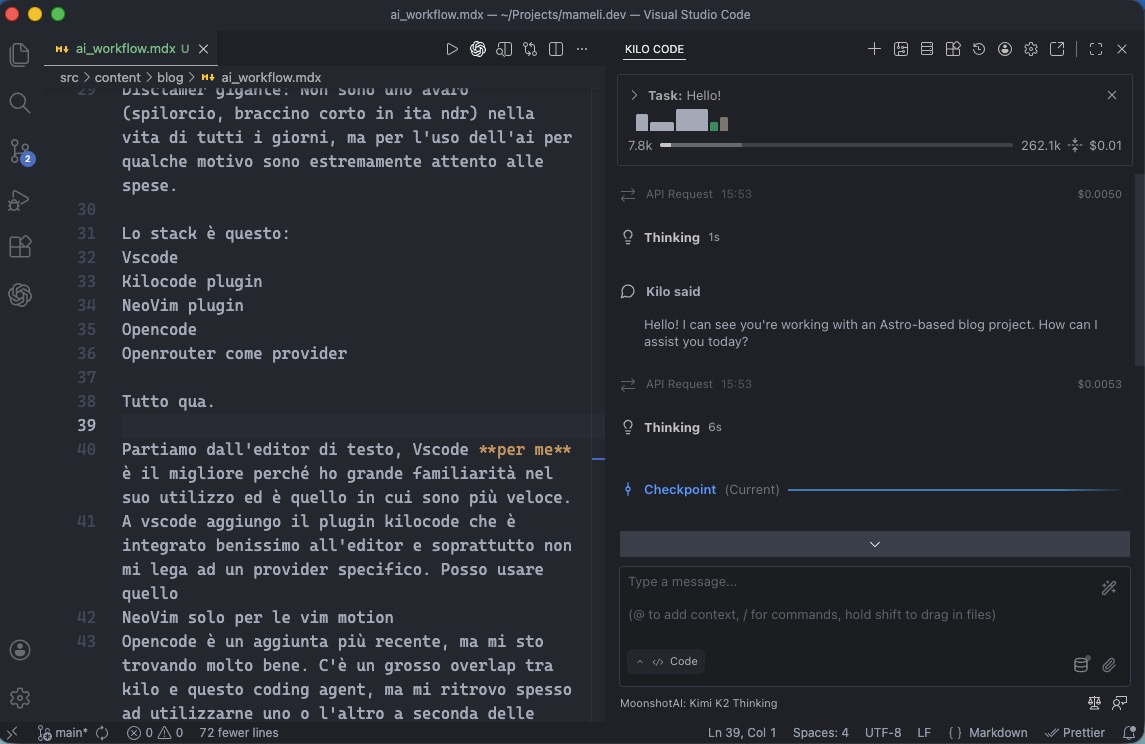

This is the stack:

- VS Code

- KiloCode plugin

- Neovim plugin

- OpenCode

- OpenRouter as provider

That’s it.

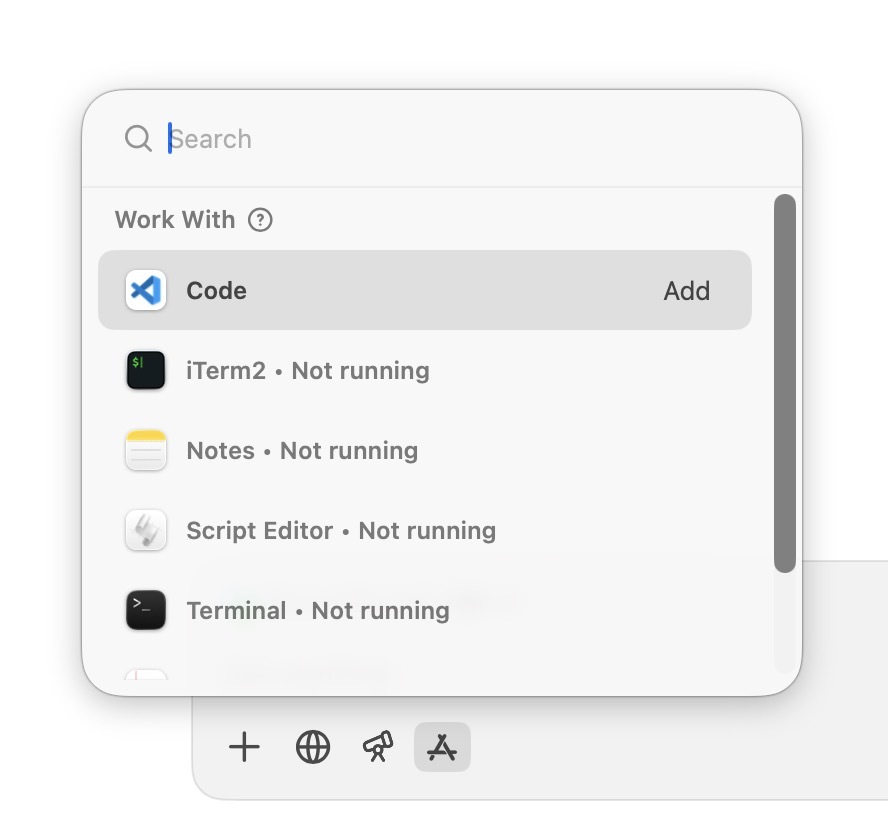

Let’s start with the text editor, VS Code. For me it’s the best choice because I know it well and it’s where I’m fastest.

To VS Code I add the KiloCode plugin, which is very well integrated with the editor and, above all, doesn’t tie me to a specific provider. I can use any provider I have credits for.

I use the Neovim plugin for vim motions and because I’m a fan of ThePrimeagen.

OpenCode is a more recent addition, but I’m getting on very well with it. There’s a big overlap between KiloCode and this coding agent, and I use one or the other depending on the situation. A very useful feature of OpenCode is that it also uses the Language Server Protocol (LSP) to help the model interact with the code. My terminal is Ghostty.

Finally, the best discovery I made this year was OpenRouter. In an environment as dynamic as AI, I’ve always felt constrained by having to subscribe to, or add credits to, OpenAI or Anthropic or Google. OpenRouter solves the problem because I can use all the models I want, and the newest ones are added within a few days at most. I’m happy to pay 5% more to have this freedom of choice. Returning to the disclaimer, I can also use open source models or, in general, very efficient models. In these last months I’ve often found myself using, for example, Grok Code Fast because: 1) it’s fast (duh?), 2) it costs little, and 3) I find it concise and direct.
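To make the "one provider, any model" point concrete, here is a minimal sketch of a request against OpenRouter's OpenAI-compatible chat completions endpoint. The helper names `build_request` and `ask`, the `OPENROUTER_API_KEY` environment variable, and the model slug in the usage note are my own assumptions for illustration, so check the slug against OpenRouter's model list before relying on it.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for the given model."""
    payload = {
        "model": model,  # the only field that changes when switching models
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the request and return the first reply (needs network and a real key)."""
    # Assumption: the key is stored in an env var named OPENROUTER_API_KEY.
    request = build_request(model, prompt, os.environ["OPENROUTER_API_KEY"])
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Switching from one model to another is then a one-string change, for example `ask("x-ai/grok-code-fast-1", "Explain this stack trace")`, with the same key and the same credits.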

Choosing the Right Model

Since we’re at it, let’s also talk about the underlying models. Which models do I use? All of them. Which is the best? It depends. The beautiful thing about having a provider like OpenRouter is that I’m not tied to one model or another, so I use the one I feel might perform better for that specific task. If I need to write code, I often use Grok Code. If I need to translate something or write text, I prefer Gemini models, and so on for all tasks. This approach was also highlighted in this very interesting article from OpenRouter itself.

I quote:

The “flight to quality” has not led to consolidation but to diversification.

This pluralism suggests users are actively benchmarking across multiple open large models rather than converging on a single standard.

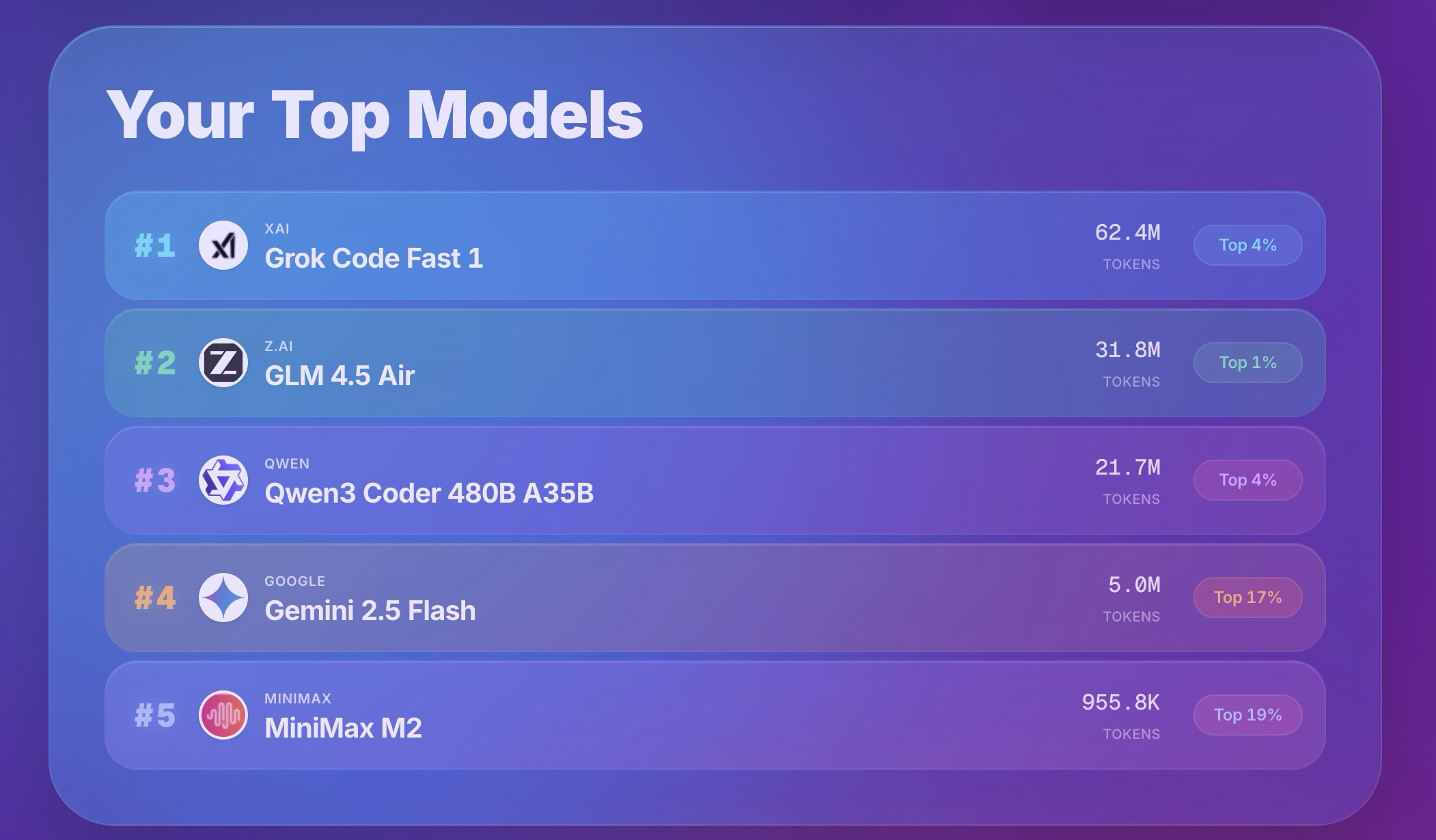

That said, I’ve done well with Z.ai models like GLM 4.5 Air (4.7 has now come out and I want to try it), Gemini, specifically the 2.5 Flash version, and, as already mentioned, xAI models like Grok Code and Grok 4.x.
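The per-task choice I described could be sketched as a small lookup table. This is only an illustration: the task labels, the `pick_model` helper, and the choice of GLM 4.5 Air as the fallback are hypothetical, and the model slugs are my assumption of how OpenRouter names these models.

```python
# Assumed OpenRouter slugs; verify them against the current model list.
DEFAULT_MODEL = "z-ai/glm-4.5-air"

MODEL_BY_TASK = {
    "code": "x-ai/grok-code-fast-1",
    "translate": "google/gemini-2.5-flash",
    "write": "google/gemini-2.5-flash",
}


def pick_model(task: str) -> str:
    """Return the model for a task label, or the default for unknown tasks."""
    return MODEL_BY_TASK.get(task.strip().lower(), DEFAULT_MODEL)
```

In practice the table lives in my head and changes often, which is exactly why not being tied to a single provider matters.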

This is my wrapped of the models I’ve used the most this year:

I practically never use Anthropic models because they’re super expensive and, for me, not worth it. I’ve used Claude Sonnet as a last resort after cheaper models had failed, but it still didn’t manage to turn the situation around, and I burned a lot of credits for nothing.

For models I always try to take a look at these sources to see if something new has come out:

- LM Arena

- OpenRouter rankings

- Hacker News

- Theo’s YouTube channel is a meme at this point

Why Am I Sticking With This AI Coding Stack?

Why do I use these open source tools that all have their integration flaws? Why haven’t I switched to a more complete solution like Cursor, even though I believe it’s the easiest choice for anyone wanting to adopt AI in their coding? Why have I been out of the hype cycle of various creators for months and haven’t even tried Antigravity?

I think that at this moment, for me, it’s more important to learn how to use this stack to its fullest than to immediately switch to something new. In both KiloCode and OpenCode I’ve defined custom agents as I found common use cases (text translation, editor for posts, code debugger), and only by using these tools over time have I been able to expand the ecosystem and optimize their use. If I switch to a new tool every time, I’m forced to learn it from scratch, and I don’t want to be constantly adapting.

Now I feel confident with these tools: I know them well, and I know how to use and extend them. Thanks to this familiarity I appreciate the results more and more, and they keep improving alongside the models themselves.

Looking Ahead to 2026

I’ll certainly change my mind in the future because better solutions will come out. I’m keeping an eye on Zed, for example, but its coding agent still doesn’t convince me much. It’s a shame because I like the rest of the editor a lot. Despite not being a fan of Anthropic models, I’d also like to try Claude Code, which recently added LSP support. Who knows what 2026 has in store for us. But as Battisti said, we will only find out by living (“Lo scopriremo solo vivendo”).